Machine learning (ML) has quickly become one of the most important technologies of our time. It underlies products like ChatGPT, Netflix recommendations, self-driving cars, and email spam filters. To help you understand this pervasive technology, this guide covers what ML is (and what it isn’t), how it works, and its impact.

Table of contents

What is machine learning?

To understand machine learning, we must first understand artificial intelligence (AI). Although the two are used interchangeably, they are not the same. Artificial intelligence is both a goal and a field of study. The goal is to build computer systems capable of thinking and reasoning at human (or even superhuman) levels. AI also consists of many different methods to get there. Machine learning is one of these methods, making it a subset of artificial intelligence.

Machine learning focuses specifically on using data and statistics in the pursuit of AI. The goal is to create intelligent systems that can learn by being fed numerous examples (data) and that don’t need to be explicitly programmed. With enough data and a good learning algorithm, the computer picks up on the patterns in the data and improves its performance.

In contrast, non-ML approaches to AI don’t depend on data and have hardcoded logic written in. For example, you could create a tic-tac-toe AI bot with superhuman performance by just coding in all the optimal moves (there are 255,168 possible tic-tac-toe games, so it would take a while, but it’s still possible). It would be impossible to hardcode a chess AI bot, though—there are more possible chess games than atoms in the universe. ML would work better in such cases.

A reasonable question at this point is, how exactly does a computer improve when you give it examples?

How machine learning works

In any ML system, you need three things: the dataset, the ML model, and the training algorithm. First, you pass in examples from the dataset. The model then predicts the right output for that example. If the model is wrong, you use the training algorithm to make the model more likely to be right for similar examples in the future. You repeat this process until you run out of data or you’re satisfied with the results. Once you complete this process, you can use your model to predict future data.

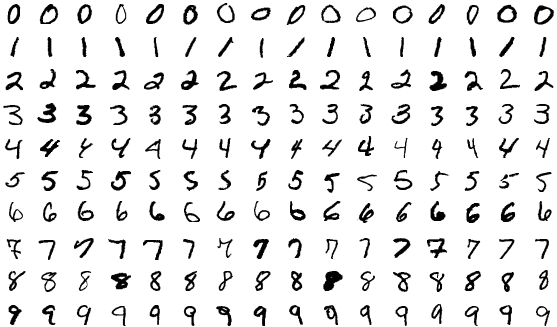

A basic example of this process is teaching a computer to recognize handwritten digits like the ones below.

You collect thousands or hundreds of thousands of pictures of digits. You start with an ML model that hasn’t seen any examples yet. You feed the images into the model and ask it to predict what number it thinks is in the image. It will return a number between zero and nine, say a one. Then, you essentially tell it, “This number is actually five, not one.” The training algorithm updates the model, so it’s more likely to respond with five the next time. You repeat this process for (almost) all the pictures available, and ideally, you have a well-performing model that can recognize digits correctly 90% of the time. Now you can use this model to read millions of digits at scale faster than a human could. In practice, the United States Postal Service uses ML models to read 98% of handwritten addresses.

You could spend months or years dissecting the details for even a tiny part of this process (look at how many different versions of optimization algorithms there are).

Types of machine learning

Machine learning can be broadly categorized into five main types based on how models learn from data: supervised, unsupervised, semi-supervised, reinforcement, and the more recent addition, self-supervised learning. These categories are primarily distinguished by whether the model is given labeled data (data with correct answers) and how it interacts with its environment.

1 Supervised learning

Supervised learning involves models being trained on labeled data. For example, in a handwritten digit recognition task, the model is told, “This is a five,” allowing it to learn the explicit relationship between inputs and outputs. The model can predict discrete labels (e.g., “cat” or “dog”) or continuous values (e.g., house prices based on features like number of rooms).

2 Unsupervised learning

In Unsupervised learning, models work with unlabeled data, identifying patterns without being told the correct answers. For instance, given a collection of animal images, the model could group them into categories based on visual similarities, even though it wasn’t explicitly told which images are cats or dogs. Clustering, association rules, and dimensionality reduction are core methods in unsupervised ML.

3 Semi-supervised learning

Semi-supervised learning combines elements of supervised and unsupervised learning. It uses a large amount of unlabeled data along with a smaller set of labeled data to train the model. The model learns from the labeled data first, then applies what it has learned to assign labels to the unlabeled data.

4 Reinforcement learning

Reinforcement learning involves models learning by interacting with an environment. Instead of being given correct answers, the model receives feedback in the form of rewards based on its actions. It refines its strategy over time to maximize the reward. A well-known example is AlphaGo Zero, which learned to play Go by playing against itself, improving through trial and error.

5 Self-supervised learning

self-supervised learning, a recent development, bridges supervised and unsupervised learning. Models are given unlabeled data but generate labels from the data itself. This technique is crucial for large language models like GPT. For example, during training, GPT predicts the next word in a sentence. Given the phrase “The cat sat on the mat,” the model is asked to predict the word following “The,” and by comparing its prediction to the actual sentence, it learns to recognize patterns. This allows for scalable learning from vast amounts of data.

Applications of machine learning

Any problem or industry that has lots of data can use ML. Many industries have seen extraordinary results from doing so, and more use cases are arising constantly. Here are some common use cases of ML:

Writing

ML models power generative AI writing products like Grammarly. By being trained on large amounts of great writing, Grammarly can create a draft for you, help you rewrite and polish, and brainstorm ideas with you, all in your preferred tone and style.

Speech recognition

Siri, Alexa, and the voice version of ChatGPT all depend on ML models. These models are trained on many audio examples, along with the corresponding correct transcripts. With these examples, models can turn speech into text. Without ML, this problem would be almost intractable because everyone has different ways of speaking and pronunciation. It would be impossible to enumerate all the possibilities.

Recommendations

Behind your feeds on TikTok, Netflix, Instagram, and Amazon are ML recommendation models. These models are trained on many examples of preferences (e.g., people like you liked this movie over that movie, this product over that product) to show you items and content that you want to see. Over time, the models can also incorporate your specific preferences to create a feed that appeals specifically to you.

Fraud detection

Banks use ML models to detect credit card fraud. Email providers use ML models to detect and divert spam email. Fraud ML models are given many examples of fraudulent data; these models then learn patterns among the data to identify fraud in the future.

Self-driving cars

Self-driving cars use ML to interpret and navigate the roads. ML helps the cars identify pedestrians and road lanes, predict other cars’ movement, and decide their next action (e.g., speed up, switch lanes, etc.). Self-driving cars gain proficiency by training on billions of examples using these ML methods.

Advantages of machine learning

When done well, ML can be transformative. ML models can generally make processes cheaper, better, or both.

Labor cost efficiency

Trained ML models can simulate the work of an expert for a fraction of the cost. For example, a human expert realtor has great intuition when it comes to how much a house costs, but that can take years of training. Expert realtors (and experts of any kind) are also expensive to hire. However, an ML model trained on millions of examples could get closer to the performance of an expert realtor. Such a model could be trained in a matter of days and would cost much less to use once trained. Less experienced realtors can then use these models to do more work in less time.

Time efficiency

ML models aren’t constrained by time in the same way humans are. AlphaGo Zero played 4.9 million games of Go in three days of training. This would take a human years, if not decades, to do. Because of this scalability, the model was able to explore a wide variety of Go moves and positions, leading to superhuman performance. ML models can even pick up on patterns experts miss; AlphaGo Zero even found and used moves not usually played by humans. This doesn’t mean experts are no longer valuable though; Go experts have gotten much better by using models like AlphaGo to try new strategies.

Disadvantages of machine learning

Of course, there are also downsides to using ML models. Namely, they’re expensive to train, and their results aren’t easily explainable.

Expensive training

ML training can get expensive. For example, AlphaGo Zero cost $25 million to develop, and GPT-4 cost more than $100 million to develop. The main costs for developing ML models are data labeling, hardware expenses, and employee salaries.

Great supervised ML models require millions of labeled examples, each of which has to be labeled by a human. Once all the labels are collected, specialized hardware is needed to train the model. Graphics processing units (GPUs) and tensor processing units (TPUs) are the standard for ML hardware and can be expensive to rent or buy—GPUs can cost between thousands and tens of thousands of dollars to purchase.

Lastly, developing excellent ML models requires hiring machine learning researchers or engineers, who can demand high salaries due to their skills and expertise.

Limited clarity in decision-making

For many ML models, it’s unclear why they give the results they do. AlphaGo Zero can’t explain the reasoning behind its decision-making; it knows that a move will work in a specific situation but not why. This can have significant consequences when ML models are used in everyday situations. ML models used in healthcare may give incorrect or biased results, and we may not know it because the reason behind its results is opaque. Bias, in general, is a huge concern with ML models, and a lack of explainability makes the problem harder to grapple with. These problems especially apply to deep learning models. Deep learning models are ML models that use many-layered neural networks to process the input. They are able to handle more complicated data and questions.

On the other hand, simpler, more “shallow” ML models (such as decision trees and regression models) don’t suffer from the same disadvantages. They still require lots of data but are cheap to train otherwise. They are also more explainable. The downside is that such models can be limited in utility; advanced applications like GPT require more complex models.

Future of machine learning

Transformer-based ML models have been all the rage for the past few years. This is the specific ML model type powering GPT (the T in GPT), Grammarly, and Claude AI. Diffusion-based ML models, which power image-creation products like DALL-E and Midjourney, have also received attention.

This trend doesn’t seem to be changing anytime soon. ML companies are focused on increasing the size of their models—bigger models that have better capabilities and bigger datasets to train them on. GPT-4 had 10 times the number of model parameters that GPT-3 had, for example. We’ll likely see even more industries use generative AI in their products to create personalized experiences for users.

Robotics is also heating up. Researchers are using ML to create robots that can move and use objects like humans. These robots can experiment in their environment and use reinforcement learning to quickly adapt and hit their goals—for example, how to kick a soccer ball.

However, as ML models become more powerful and pervasive, there are concerns about their potential impact on society. Issues like bias, privacy, and job displacement are being hotly debated, and there is a growing recognition of the need for ethical guidelines and responsible development practices.

Conclusion

Machine learning is a subset of AI, with the explicit goal of making intelligent systems by letting them learn from data. Supervised, unsupervised, semi-supervised, and reinforcement learning are the main types of ML (along with self-supervised learning). ML is at the core of many new products coming out today, such as ChatGPT, self-driving cars, and Netflix recommendations. It can be cheaper or better than human performance, but at the same time, it is expensive initially and less explainable and steerable. ML is also poised to grow even more popular over the next few years.

There are a lot of intricacies to ML, and the opportunity to learn and contribute to the field is expanding. In particular, Grammarly’s guides on AI, deep learning, and ChatGPT can help you learn more about other important parts of this field. Beyond that, getting into the details of ML (such as how data is collected, what models actually look like, and the algorithms behind the “learning”) can help you incorporate it effectively into your work.

With ML continuing to grow—and with the expectation that it will touch almost every industry—now is the time to start your ML journey!