Whether they’re building models that predict bias or devising AI agents that help developers write more robust tests, Grammarly’s machine learning interns have an impact from day one. In the program, interns receive practical, hands-on experience with mentorship, equipping them with valuable skills for their future careers.

This summer, we welcomed 29 interns across our global offices. In this blog post, we’ll spotlight three interns from our machine learning team. We’ll learn about each intern’s journey to Grammarly, unpack their internship project, and share how they’ve grown as a result of the internship.

Kelly Deng

Fast facts

University: Cornell Tech

Your favorite food treat: Matcha ice cream

Journey to Grammarly

Kelly was introduced to machine learning during her freshman year, when she took an introductory Python class. “I was fascinated by how these algorithms could extract so much insight from various data and make predictions about things we haven’t seen. As a human, I wouldn’t be able to do that myself—but I could train a model to do it for me, and that idea really hooked me,” she explained.

That spark quickly developed into a passion for natural language processing (NLP) and large language models (LLMs). When it came time to choose an internship, Grammarly was an obvious choice, since it sat at the intersection of both her interests. Beyond that, she was already a longtime user of the product, and the recruiting process only reinforced her enthusiasm. “Every step of the way, I received timely updates and thoughtful feedback, which really helped during a stressful recruiting season,” she said.

The internship project

For her internship project, Kelly built a multiclass classification model to interpret user intent within an internal system. The model takes a user prompt (e.g., “Project Panda”) and returns a probability distribution over the internal resources or actions the user might be seeking. “This would help Grammarly better understand user intent from natural language prompts, and we can use this capability to enhance the user experience,” Kelly explained. Today, the model is fully trained and deployed to production, but getting there wasn’t straightforward.
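To make “a probability distribution over resources” concrete, here is a minimal sketch of the final step of a multiclass classifier: turning raw class scores into probabilities with a softmax. The label list, the scores, and the function names are illustrative assumptions, not details of Kelly’s model.

```python
import math

# Hypothetical label set; the post mentions Confluence, Slack, and Jira as examples.
LABELS = ["Confluence", "Slack", "Jira"]


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_intent(logits):
    """Pair each label with its probability, most likely first."""
    probs = softmax(logits)
    return sorted(zip(LABELS, probs), key=lambda pair: pair[1], reverse=True)


# Made-up scores standing in for a trained model's output on one prompt.
ranking = predict_intent([2.0, 0.5, -1.0])
for label, prob in ranking:
    print(f"{label}: {prob:.3f}")
```

Returning the full distribution rather than a single label lets a downstream system decide how confident the model must be before acting on a prediction.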

“Our training dataset consisted of user search queries and the tool the user would likely click on, like Confluence, Slack, or Jira. However, the annotations were extremely imbalanced. For example, the ‘Confluence’ class had about 100 times more examples than ‘Jira,’ which made it hard to achieve good performance,” explained Kelly.

Kelly first tried standard techniques for imbalanced training data, such as focal loss and balanced sampling, but they didn’t improve performance. “These methods also require careful hyperparameter tuning, which is time-consuming,” she said. After discussing the problem with her internship mentor, Luke Salamone, Kelly generated synthetic queries for the minority classes, which balanced the dataset and improved the model’s performance.
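For readers unfamiliar with the two standard techniques mentioned above, the sketch below shows the general idea behind each. It uses toy data and assumed names (`focal_loss`, `balanced_sample`, the `gamma` default); it is not Kelly’s training code.

```python
import math
import random
from collections import Counter


def focal_loss(p_true, gamma=2.0):
    """Focal loss for one example, given the predicted probability of its true class.

    Confident, correct predictions (p_true near 1) are down-weighted by
    (1 - p_true) ** gamma, so hard examples dominate the training signal.
    """
    return -((1.0 - p_true) ** gamma) * math.log(p_true)


def balanced_sample(examples, k, seed=0):
    """Draw k examples with replacement, weighting each inversely to its class size."""
    counts = Counter(label for _, label in examples)
    weights = [1.0 / counts[label] for _, label in examples]
    rng = random.Random(seed)
    return rng.choices(examples, weights=weights, k=k)


# Toy data with roughly the 100:1 imbalance described in the post.
data = [(f"query {i}", "Confluence") for i in range(1000)]
data += [(f"ticket {i}", "Jira") for i in range(10)]

sample = balanced_sample(data, k=2000)
sample_counts = Counter(label for _, label in sample)
print(sample_counts)
```

Note that balanced sampling only repeats the same few minority examples, which is one reason generating new synthetic queries can help where resampling does not.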

But training the model was only half the battle. To deploy the model into production, Kelly needed to learn a new codebase and TypeScript. With Luke’s patient support and debugging help, she successfully got the model running in production. “He offered guidance and suggestions, but also gave me the freedom to explore different solutions and choose the approach I felt worked best. It really helped me grow,” she said.

Learning and growth

During her internship, Kelly didn’t just learn about what it takes to build software successfully in production—she also learned about the value of feedback and being confident in asking for input.

“I used to feel shy about sharing my work with others, worried that it wasn’t polished enough,” she explained. “But through my time at Grammarly, I noticed how everyone here is intentional about giving and receiving feedback; it’s really part of the culture here. So, I started sharing my progress earlier, asking for input more regularly, and even demoing my work during team syncs and company-wide meetings. This has helped me become more confident and collaborative in how I work.”

Priyam Basu

Fast facts

University: University of Washington

Your favorite fictional character: Patrick from SpongeBob SquarePants—“He’s so energetic!”

Journey to Grammarly

Ever since he was a child, Priyam has been fascinated by the idea of creating “intelligent” computers that can perform tasks autonomously, which naturally led him to explore AI and machine learning. “I was also inspired by, of course, Terminator,” he noted, because no future engineer is immune to the pull of awe-inspiring, sentient robots.

This passion took him to grad school at the University of Washington. When it came time to find a summer internship, he wanted to work at a company in the AI space that also had a meaningful mission—Grammarly checked both those boxes. “I loved Grammarly’s focus on creating impact while doing it ethically,” he added.

The internship project

Priyam’s internship project focused on developing evaluation methodologies for Grammarly’s AI detection services, assessing whether they showed bias against specific demographic groups, such as people whose first language is not English. To create this evaluation system, Priyam needed two things: diverse text samples from different demographic groups and automated tests to measure whether AI detectors exhibited bias against these groups.
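One common way to frame such an automated test is to compare how often a detector wrongly flags human-written text across groups. The sketch below illustrates that idea only; the group names, the sample records, and the choice of false positive rate as the metric are assumptions, not a description of the metrics Priyam’s team selected.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute the share of human-written texts flagged as AI, per group.

    records: iterable of (group, flagged_as_ai) pairs, where every text
    is known to be human-written.
    """
    flagged = defaultdict(int)
    total = defaultdict(int)
    for group, is_flagged in records:
        total[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / total[group] for group in total}


def max_gap(rates):
    """Largest difference in false positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up detector outputs for two hypothetical groups.
records = (
    [("native", False)] * 9 + [("native", True)]
    + [("non_native", False)] * 7 + [("non_native", True)] * 3
)

rates = false_positive_rates(records)
gap = max_gap(rates)
print(rates, round(gap, 2))
```

A large gap between groups would suggest that the detector penalizes one group’s writing more often, which is the kind of signal an evaluation pipeline can track over time.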

“There weren’t any high-quality training datasets, so I realized I’d have to build my own,” Priyam explained. “Fortunately, in my research, I found a bias-free open-source dataset of text writing from various demographic groups. I used AI to introduce bias into the dataset, and used the AI-generated samples as my training data.”

Priyam’s next step was to define the evaluation methodology. “We didn’t have clear scoping of the types of biases that we wanted to evaluate or the metrics to measure them.” He tapped the broader team to help him brainstorm and prioritize which biases to focus on.

As a result of this collaboration, Priyam successfully shipped the evaluation pipelines. He also collaborated with the team to write a research paper that evaluates a range of AI detectors for bias across various demographic groups.

Learning and growth

For Priyam, the most significant growth was learning to adapt to the fast-paced nature of the tech industry. “Things move so quickly here, and I had to learn how to be more efficient to match that pace. While it was challenging, it was a good push; it made me realize that you can often build things faster than you originally planned,” he said.

He credits his colleagues, Yunfeng Zhang and Justin Hugues-Nuger, for their support and mentorship in helping him make this shift. “They were extremely supportive, whether by answering my countless questions, filling me in on important customer or business context, or helping me find the right documentation,” he said.

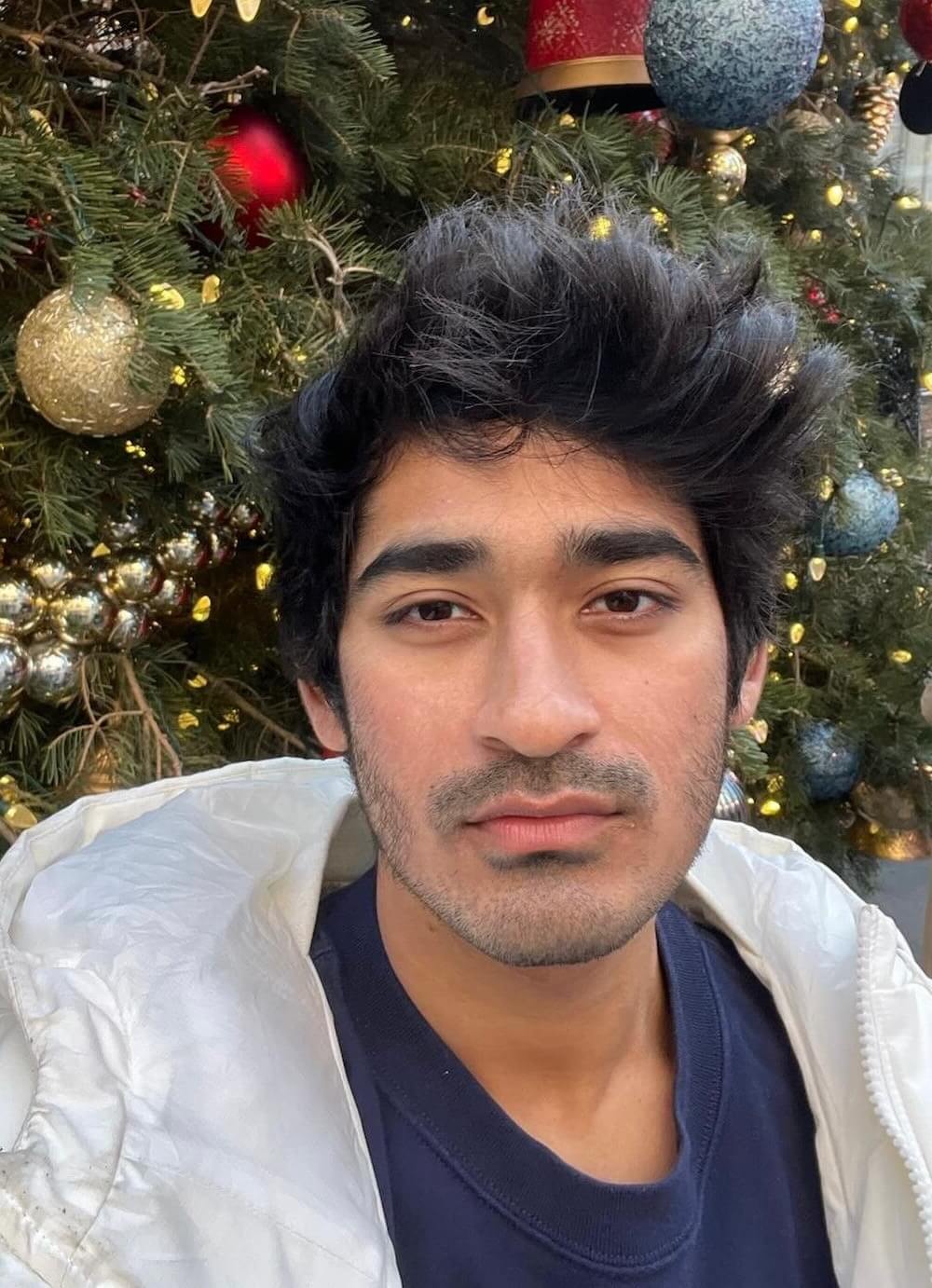

Sameer Komoravolu

Fast facts

University: University of Illinois Urbana-Champaign

A skill you want to master: Brazilian jiu-jitsu

Journey to Grammarly

Sameer has always dreamt of applying math and physics to create new things that help people. This interest naturally drew him to machine learning—and eventually to Grammarly. “I wanted to learn from industry leaders while working on meaningful projects, so working here felt like the perfect fit. Plus, as a Grammarly user since middle school, I’ve seen firsthand the impact of the product,” he explained.

The internship project

Sameer’s project required him to build agent-testing agents (ATAs), which are AI agents that can break other AI agents (you read that right). Developers can use these ATAs to identify edge cases in AI agents that manual testing might have overlooked. For example, suppose a developer makes an AI agent to handle product questions from cross-functional teams, but the agent struggles with complex queries. An ATA could automatically generate increasingly difficult questions to find exactly where the agent breaks down, then provide specific feedback on how developers could fix the issue.

The biggest hurdle was moving beyond rigid, pre-written test scenarios to dynamically generated ones. “With hard-coded testing criteria, the ATA was only useful in certain domains. We wanted to make it more generally adaptable, which required us to dynamically generate testing scenarios,” Sameer said. His mentor, Khalil Mrini, provided crucial guidance: “He directed me to over 20 papers that inspired the test-generation node, making me rigorously justify each design decision I made so it would hold up in the paper.”

With Khalil’s help, Sameer combined multiple techniques from these papers by building a feedback loop using a deep-thinking model (an LLM with chain-of-thought reasoning). However, this architecture quickly became unscalable, so he switched to parallel execution, allowing multiple tests to run simultaneously. He also refined the architecture by reducing context sizes and using expensive, high-powered models only when necessary.
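The move to parallel execution can be pictured with a small sketch. Everything here is a stand-in: `agent_under_test` is a toy function rather than a real AI agent, the numeric `difficulty` field is an invented simplification of a test scenario, and the thread pool is just one way to run tests concurrently, not necessarily the approach Sameer used.

```python
from concurrent.futures import ThreadPoolExecutor


def agent_under_test(question):
    """Stand-in agent: only handles questions up to a fixed difficulty."""
    return "answer" if question["difficulty"] <= 3 else None


def run_test(question):
    """Run one scenario and record whether the agent produced an answer."""
    passed = agent_under_test(question) is not None
    return {"difficulty": question["difficulty"], "passed": passed}


# Increasingly difficult scenarios, as in the product-questions example.
scenarios = [{"difficulty": level} for level in range(1, 7)]

# Run scenarios concurrently; pool.map returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(run_test, scenarios))

failures = [r["difficulty"] for r in results if not r["passed"]]
first_failure = min(failures) if failures else None
print(f"Agent first breaks at difficulty {first_failure}")
```

Because each scenario is independent, the tests can run side by side instead of waiting on one long sequential loop, which is the scaling benefit the paragraph above describes.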

By the end of his internship, Sameer had built a working ATA prototype with a demo for his team and documented his findings in a research paper.

Learning and growth

Coming into the internship, Sameer was unsure about his next career steps. “I was conflicted about how to proceed with my career: Should I continue in the industry or return to school?”

Unexpectedly, the internship helped him arrive at some clarity. “After speaking with my mentors and teammates, I realized that these paths are not so different, and I can explore both research and industry opportunities to see what I care about. As long as I’m taking advantage of learning opportunities, I will keep growing,” he explained.

This focus on growth also extended to non-ML parts of the internship: “We went rock climbing with the other interns, and I did not expect to learn how to boulder through the internship. It was pretty cool!”

Looking ahead

Our machine learning interns’ projects have already had an incredible impact in accelerating Grammarly’s mission. More importantly, these projects are taking on a life of their own—we can’t wait to see interns’ research papers published and their models deployed in our products.

If you’re interested in applying to Grammarly’s internship program next year, we’d love to hear from you. Stay tuned to our Careers page for updates.